Three and a Half Weeks of AI Coding Challenges

| Timeline: | Sep 26 - Oct 19, 2016 |
|---|---|
| Languages & Tools: | Python, Java, TensorFlow, Keras |
| School: | Colorado College |
| Course: | CP365: Artificial Intelligence |

Let me set the scene: Colorado College, the infamous Block Plan (one course at a time, each crammed into a three-and-a-half-week block), and me, armed with caffeine, optimism, and a vague understanding of Python. In just 3.5 weeks (yes, you read that right), I tackled a semester’s worth of Artificial Intelligence projects. Here are the four that left the biggest mark and, occasionally, a dent in my sanity.

The most fun challenges

1. TensorFlow Set Solver: Can a Neural Net Play SET Better Than Me?

SET is a card game that’s equal parts logic puzzle and optical illusion. I figured, why not make a neural network do the heavy lifting?

- What I built: A Python program that scans a photo of a SET board, detects cards, and tries to identify them using two neural networks: one for visibility (is there a card here?) and one for recognition (which card is it?).

- How it works: The code slides a window over the board image, uses the visibility model to spot cards, and then the recognition model to guess which card is present. It tallies up “votes” for each card position and prints out its best (and second-best) guesses.

- What I learned: Training neural nets is hard. Getting them to work on real, messy images is even harder. But seeing the model actually recognize cards (sometimes!) was a huge win.

Side note: Debugging image data is like herding cats. If you ever want to feel humble, try it.

Here’s the core solving logic:

```python
import numpy as np
import imageio.v2 as imageio  # scipy.misc.imread was removed in SciPy 1.2

# CardDatabase, CardRecognitionNN, CardVisibilityNN and
# get_card_idx_from_window_bounds are defined elsewhere in the project.

WINDOW_WIDTH = 30
WINDOW_HEIGHT = 40
STRIDE = 2


def solve_set_grid(db_file_path, grid_image_path):
    db = CardDatabase(db_file_path)
    recognition_model = CardRecognitionNN(db).train()
    visibility_model = CardVisibilityNN(db).train()

    # drop an alpha channel if the photo has one; the models expect RGB
    grid_image = imageio.imread(grid_image_path)[:, :, :3]
    grid_height, grid_width = grid_image.shape[:2]

    # there are 9 locations on the board, each with 9 possible votes
    card_votes = np.zeros((9, 9))

    # slide a window across the image, STRIDE pixels at a time
    for row in range(0, grid_height - WINDOW_HEIGHT + 1, STRIDE):
        for col in range(0, grid_width - WINDOW_WIDTH + 1, STRIDE):
            # slice the window out of the image and add a batch dimension
            window = grid_image[row:row + WINDOW_HEIGHT, col:col + WINDOW_WIDTH]
            window = window.reshape(1, WINDOW_HEIGHT, WINDOW_WIDTH, 3)

            # run the window through the visibility model; predict() returns
            # softmax probabilities, so pick the likelier class rather than
            # testing for an exact 1.0
            visible = visibility_model.predict(window)
            if visible[0].argmax() == 1:
                # the window contains a card: run it through the recognition model
                recognized = recognition_model.predict(window)[0]
                card_idx = get_card_idx_from_window_bounds(
                    col, col + WINDOW_WIDTH, row, row + WINDOW_HEIGHT,
                    grid_width, grid_height)
                card_votes[card_idx] += recognized

    # make final decisions: the most-voted card at each location
    card_decisions = card_votes.argmax(axis=1)
    for i, decision in enumerate(card_decisions):
        print("Card %d:" % i, CardDatabase.get_description(decision + 9))
```
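The solver relies on get_card_idx_from_window_bounds, which isn’t shown here. Below is a minimal Python sketch of what such a helper could look like. It assumes the nine cards sit in an evenly spaced 3x3 grid that fills the photo, and that each window votes for whichever cell contains its center. The body is hypothetical; only the name and the argument order come from the call above.

```python
def get_card_idx_from_window_bounds(left, right, top, bottom, grid_width, grid_height):
    """Hypothetical helper: map a window to one of 9 card slots in a 3x3 grid.

    Assumes the cards fill the photo in an evenly spaced 3x3 layout and that a
    window belongs to the slot containing its center. Slots are numbered
    row by row: 0 1 2 / 3 4 5 / 6 7 8.
    """
    center_x = (left + right) / 2
    center_y = (top + bottom) / 2
    # min() keeps a center lying exactly on the far edge inside the grid
    col = min(int(3 * center_x / grid_width), 2)
    row = min(int(3 * center_y / grid_height), 2)
    return row * 3 + col


if __name__ == "__main__":
    # a 300x400 photo: top-left, middle and bottom-right windows
    print(get_card_idx_from_window_bounds(0, 30, 0, 40, 300, 400))
    print(get_card_idx_from_window_bounds(135, 165, 180, 220, 300, 400))
    print(get_card_idx_from_window_bounds(270, 300, 360, 400, 300, 400))
```

A window that straddles two cards still votes for exactly one slot. Filtering out those half-on, half-off windows is the visibility model’s job.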

And the neural network architecture:

setsolver_cnn.mmd

```mermaid
---
config:
  theme: 'base'
  themeVariables:
    primaryColor: '#333'
    actorTextColor: '#fff'
    primaryTextColor: '#aaa'
    primaryBorderColor: '#aaa'
    lineColor: '#F8B229'
---
sequenceDiagram
    participant I as Input Image
    participant C1 as Conv2D
    participant R1 as ReLU

    Note right of I: 40x30x3
    I->>C1: Pass Tensor
    Note right of C1: 32 filters, 3x3 kernel
    C1->>R1: Apply Activation
```

```mermaid
---
config:
  theme: 'base'
  themeVariables:
    primaryColor: '#333'
    actorTextColor: '#fff'
    primaryTextColor: '#aaa'
    primaryBorderColor: '#aaa'
    lineColor: '#F8B229'
---
sequenceDiagram
    participant C2 as Conv2D
    participant R2 as ReLU
    participant F as Flatten Layer
    participant Dr as Dropout

    Note over C2: Input from previous stage (ReLU)
    C2->>R2: Apply Activation
    Note right of C2: 64 filters, 3x3 kernel
    R2->>F: Pass Feature Map
    F->>Dr: Pass Flattened Vector
    Note over Dr: Rate 0.5
```

```mermaid
---
config:
  theme: 'base'
  themeVariables:
    primaryColor: '#333'
    actorTextColor: '#fff'
    primaryTextColor: '#aaa'
    primaryBorderColor: '#aaa'
    lineColor: '#F8B229'
---
sequenceDiagram
    participant De as Dense Layer
    participant S as Softmax
    participant O as Output

    Note over De: Input from previous stage (Dropout)
    De->>S: Apply Activation
    Note right of De: Output Size
    S->>O: Pass Probabilities
    Note over O: Card Classification
```

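```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Conv2D, Activation, Flatten, Dropout, Dense


class ConvolutionalNN:
    def create_model(self, in_shape, out_size):
        # Written against the current Keras API: Conv2D(filters, kernel_size, ...).
        # The original Keras 1 call Convolution2D(32, 3, 3, border_mode='valid')
        # meant a 3x3 kernel; in current Keras a third positional argument is strides.
        model = Sequential()
        model.add(Input(shape=in_shape))  # e.g. (40, 30, 3)

        # First convolutional layer: 32 filters, 3x3 kernel, no padding
        model.add(Conv2D(32, (3, 3), padding='valid'))
        model.add(Activation('relu'))

        # Second convolutional layer: 64 filters, 3x3 kernel
        model.add(Conv2D(64, (3, 3)))
        model.add(Activation('relu'))

        # Flatten the feature maps; drop half the units at random during training
        model.add(Flatten())
        model.add(Dropout(0.5))

        # One output per class, turned into probabilities by softmax
        model.add(Dense(out_size))
        model.add(Activation('softmax'))

        model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model
```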
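Both convolutions use “valid” padding and there’s no pooling, so the shapes are easy to trace by hand. The Python sketch below does that arithmetic for the 40x30x3 input. It assumes stride-1 3x3 kernels (as the original Keras 1 calls specify) and out_size=9, matching the nine vote slots per board location. summarize and conv_valid are illustrative helpers, not part of the project.

```python
def conv_valid(height, width, kernel):
    """Output height/width of a stride-1 'valid' (no padding) convolution."""
    return height - kernel + 1, width - kernel + 1


def summarize(in_shape=(40, 30, 3), out_size=9):
    """Trace output shapes and trainable parameter counts through the CNN."""
    h, w, c = in_shape
    layers = []

    # Conv 1: 32 filters of size 3x3xc, plus one bias per filter
    h, w = conv_valid(h, w, 3)
    layers.append(("conv1", (h, w, 32), (3 * 3 * c + 1) * 32))

    # Conv 2: 64 filters of size 3x3x32, plus biases
    h, w = conv_valid(h, w, 3)
    layers.append(("conv2", (h, w, 64), (3 * 3 * 32 + 1) * 64))

    # Flatten (and Dropout) have no weights
    flat = h * w * 64
    layers.append(("flatten", (flat,), 0))

    # Dense: one weight per input per output, plus one bias per output
    layers.append(("dense", (out_size,), (flat + 1) * out_size))
    return layers


if __name__ == "__main__":
    for name, shape, params in summarize():
        print(f"{name:8s} {str(shape):14s} {params:>8,d}")
```

By this count the Dense layer holds 539,145 of the 558,537 trainable parameters (about 96.5%). That’s because Flatten passes it all 59,904 activations from the second convolution.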

2. Othello AI: Bots, Boards, and the Joy of Getting Crushed

![Othello a.k.a. Reversi board game](/project/ai-block-plan/images/othello.jpg)

Othello a.k.a. Reversi board game. Image: Wikimedia under CC BY-SA 4.0

Othello (a.k.a. Reversi) is a classic strategy game, and for this project, I went all-in: Java GUI, multiple AI bots, and a whole lot of game logic.

- What I built: A full Othello game engine with a graphical board, support for human and AI players, and several bot strategies (random, greedy, and minimax).

- Cool features: The minimax bot looks several moves ahead, assumes the opponent always plays its best reply, and picks the move with the best guaranteed outcome. The greedy bot just grabs the most pieces it can each turn. You can play against the computer or watch bots battle it out.

- What I learned: Implementing minimax was a brain workout, but seeing the bots improve (and sometimes beat me) was super satisfying. Also, GUIs are fun until they aren’t.
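The greedy strategy is short enough to sketch. Here’s a minimal Python version (the project itself is Java). It assumes an 8x8 board stored as a list of lists, with 0 for empty, 1 for black and 2 for white. flips, greedy_move and initial_board are hypothetical names, not the project’s API.

```python
EMPTY, BLACK, WHITE = 0, 1, 2
DIRECTIONS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def flips(board, row, col, player):
    """Squares that would flip if `player` moved at (row, col); [] if illegal."""
    if board[row][col] != EMPTY:
        return []
    size = len(board)
    opponent = WHITE if player == BLACK else BLACK
    captured = []
    for dr, dc in DIRECTIONS:
        r, c = row + dr, col + dc
        line = []
        # walk over a run of opponent pieces...
        while 0 <= r < size and 0 <= c < size and board[r][c] == opponent:
            line.append((r, c))
            r, c = r + dr, c + dc
        # ...which is captured only if one of our own pieces closes it off
        if line and 0 <= r < size and 0 <= c < size and board[r][c] == player:
            captured.extend(line)
    return captured


def greedy_move(board, player):
    """Pick the legal move that flips the most pieces right now (None if no move)."""
    best, best_count = None, 0
    size = len(board)
    for r in range(size):
        for c in range(size):
            count = len(flips(board, r, c, player))
            if count > best_count:
                best, best_count = (r, c), count
    return best


def initial_board(size=8):
    """Standard opening position: two white and two black discs in the center."""
    board = [[EMPTY] * size for _ in range(size)]
    mid = size // 2
    board[mid - 1][mid - 1] = board[mid][mid] = WHITE
    board[mid - 1][mid] = board[mid][mid - 1] = BLACK
    return board
```

In the opening position, every legal move for black flips exactly one disc. greedy_move therefore breaks the tie by scan order and returns (2, 3), the first such move it finds.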

Fun fact: My minimax bot once lost to the random bot. I still have trust issues.

Here’s the move-selection driver for the minimax bot. It sets up the alpha-beta bounds, then keeps deepening the search until its time budget runs out:

```java
public OthelloMove makeMove(OthelloBoard board, int move) {
    Metrics.reset();
    timer.start();

    // Black maximizes the score and white minimizes it, so the initial
    // alpha/beta bounds are mirrored depending on which side this bot plays
    if(playerColor == OthelloBoard.BLACK) {
        alpha = -Integer.MAX_VALUE;
        beta = Integer.MAX_VALUE;
    } else {
        alpha = Integer.MAX_VALUE;
        beta = -Integer.MAX_VALUE;
    }

    depthLimit = INITIAL_DEPTH_LIMIT;
    Node root = new Node(board, null, currentMove, null);
    q = new LinkedList<Node>();

    // Search from the root, then keep expanding queued nodes (raising the
    // depth limit when a queued node sits at it) until time runs out
    dfs(root);
    while(!q.isEmpty() && !timer.hasFinished()) {
        Node nextNode = q.poll();
        if(nextNode.visited)
            continue;
        if(nextNode.depth == currentMove + depthLimit) {
            depthLimit += DEPTH_INCREMENT;
        }
        dfs(nextNode);
    }

    // Choose the best-scored child as the next move
    Node bestChild = root.children.get(0);
    for(Node child : root.children) {
        if((playerColor == OthelloBoard.BLACK && child.score > bestChild.score) ||
           (playerColor == OthelloBoard.WHITE && child.score < bestChild.score)) {
            bestChild = child;
        }
    }

    // Advance two plies: this bot's move plus the opponent's reply
    currentMove += 2;
    previousMove = bestChild;
    return bestChild.move;
}
```
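The driver above hands the real work to dfs, which isn’t shown. For reference, here’s textbook minimax with alpha-beta pruning in Python, written over an abstract tree where the caller supplies the children and evaluate functions. It’s a generic sketch, not the project’s dfs. It follows the same convention as the Java code: the maximizing side wants high scores and the minimizing side wants low ones.

```python
import math


def alphabeta(node, depth, alpha, beta, maximizing, children, evaluate):
    """Minimax with alpha-beta pruning over an abstract game tree.

    children(node) -> list of successor nodes; evaluate(node) -> score from
    the maximizing player's point of view. Both are supplied by the caller.
    """
    kids = children(node)
    if depth == 0 or not kids:
        return evaluate(node)
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta, False, children, evaluate))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer already has a better option elsewhere
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta, True, children, evaluate))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizer already has a better option elsewhere
    return value


if __name__ == "__main__":
    # Toy two-ply tree: the root (max) picks among four min nodes
    tree = [[3, 5], [6, 9], [1, 2], [0, -1]]
    leaves_seen = []

    def children(node):
        return node if isinstance(node, list) else []

    def evaluate(leaf):
        leaves_seen.append(leaf)
        return leaf

    print(alphabeta(tree, 2, -math.inf, math.inf, True, children, evaluate))  # 6
    print(leaves_seen)  # [3, 5, 6, 9, 1, 0]: leaves 2 and -1 were pruned
```

Pruning never changes the answer; it only skips branches that can’t affect it. That’s why the same time budget lets an alpha-beta bot search deeper than plain minimax.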

And the evaluation function that weights corners and sides:

```java
private int estimateScore(OthelloBoard b) {
    int score = b.getBoardScore();
    // check corners: a piece of player 1 adds the weight, an opponent piece subtracts it
    if(b.board[0][0] > 0) {
        score += CORNER_WEIGHT*(b.board[0][0] == 1 ? 1 : -1);
    }
    if(b.board[0][b.size-1] > 0) {
        score += CORNER_WEIGHT*(b.board[0][b.size-1] == 1 ? 1 : -1);
    }
    if(b.board[b.size-1][b.size-1] > 0) {
        score += CORNER_WEIGHT*(b.board[b.size-1][b.size-1] == 1 ? 1 : -1);
    }
    if(b.board[b.size-1][0] > 0) {
        score += CORNER_WEIGHT*(b.board[b.size-1][0] == 1 ? 1 : -1);
    }
    // check sides (these checks also pass over each corner twice, so a
    // corner earns 2*SIDE_WEIGHT on top of CORNER_WEIGHT)
    for(int i=0; i<b.size; i++) {
        if(b.board[i][0] > 0) {
            score += SIDE_WEIGHT*(b.board[i][0] == 1 ? 1 : -1);
        }
        if(b.board[0][i] > 0) {
            score += SIDE_WEIGHT*(b.board[0][i] == 1 ? 1 : -1);
        }
        if(b.board[b.size-1][i] > 0) {
            score += SIDE_WEIGHT*(b.board[b.size-1][i] == 1 ? 1 : -1);
        }
        if(b.board[i][b.size-1] > 0) {
            score += SIDE_WEIGHT*(b.board[i][b.size-1] == 1 ? 1 : -1);
        }
    }
    return score;
}
```
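To see what this heuristic rewards, here’s a compact Python port, with three assumptions. First, 0 means empty, 1 is the player the score favors, and any other positive value is the opponent (matching the == 1 ? 1 : -1 checks). Second, a plain piece difference stands in for getBoardScore(). Third, the weights 10 and 2 are made up, since the real constants aren’t shown.

```python
CORNER_WEIGHT = 10  # hypothetical values; the real constants aren't shown
SIDE_WEIGHT = 2


def owner_sign(cell):
    """+1 for player 1, -1 for any other piece, 0 for an empty square."""
    if cell == 0:
        return 0
    return 1 if cell == 1 else -1


def estimate_score(board):
    size = len(board)
    last = size - 1
    # assumed stand-in for getBoardScore(): player 1's pieces minus the opponent's
    score = sum(owner_sign(cell) for row in board for cell in row)
    # corners
    for r, c in [(0, 0), (0, last), (last, last), (last, 0)]:
        score += CORNER_WEIGHT * owner_sign(board[r][c])
    # sides: the same four edge checks as the Java loop, corners included
    for i in range(size):
        for r, c in [(i, 0), (0, i), (last, i), (i, last)]:
            score += SIDE_WEIGHT * owner_sign(board[r][c])
    return score
```

Because the side checks pass through every corner twice, a lone corner piece on an empty 4x4 board scores 1 + 10 + 2 + 2 = 15 here. A plain edge square scores 3, and an interior square scores 1.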

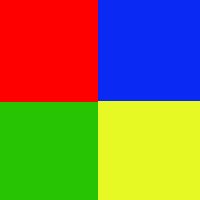

3. Genetic Algorithm Art: Evolving Images, One Polygon at a Time

This one was part science, part digital art experiment. The goal? Use a genetic algorithm to “evolve” a set of colored polygons that mimic a target image.

The starting image, which the genetic algorithm evolves toward the target.

- What I built: A Java program that starts with a population of random polygon arrangements and, through crossover and mutation, gradually makes them look more like a given picture.

- How it works: Each “solution” is a set of polygons. The fitness function samples random pixels and scores how close the generated image’s colors are to the target’s, and the best solutions get to “reproduce.” Over thousands of generations, the results get spookily close to the original.

- What I learned: Evolutionary algorithms are mesmerizing to watch. Sometimes, the program would get stuck in a rut, but with a tweak to mutation rates, it would suddenly leap forward.
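The crossover and mutation operators aren’t shown in the code below. Here’s a minimal Python sketch. It assumes each solution is a fixed-length list of polygons, each stored as (vertices, RGBA color). Uniform crossover and the ±10-pixel vertex nudge are illustrative choices, not necessarily what the project does.

```python
import random


def crossover(parent_a, parent_b, rng):
    """Uniform crossover: each polygon slot comes whole from one parent or the other."""
    return [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]


def mutate(genome, rate, width, height, rng):
    """With probability `rate` per polygon, nudge one vertex or re-roll one color channel."""
    mutated = []
    for vertices, color in genome:
        if rng.random() < rate:
            if rng.random() < 0.5:
                vertices = list(vertices)
                i = rng.randrange(len(vertices))
                x, y = vertices[i]
                # move the vertex a little, clamped to the canvas
                vertices[i] = (min(max(x + rng.randint(-10, 10), 0), width - 1),
                               min(max(y + rng.randint(-10, 10), 0), height - 1))
            else:
                channel = rng.randrange(4)
                color = tuple(rng.randint(0, 255) if k == channel else v
                              for k, v in enumerate(color))
        mutated.append((vertices, color))
    return mutated


if __name__ == "__main__":
    rng = random.Random(0)
    red = ([(0, 0), (10, 0), (0, 10)], (255, 0, 0, 128))
    blue = ([(5, 5), (15, 5), (5, 15)], (0, 0, 255, 128))
    child = crossover([red] * 5, [blue] * 5, rng)
    print(mutate(child, 0.2, 20, 20, rng))
```

Uniform crossover keeps whole polygons intact, so a child inherits complete shapes rather than fragments of them. Small mutations then let the population fine-tune what it already has.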

Pro tip: If you want to feel like a mad scientist, watch your computer “paint” with polygons at 2 a.m.

Here’s the core genetic algorithm loop:

```java
public void runSimulation() {
    currentEpoch = 0;
    Random rand = new Random();
    while(normalizeFitnesses() &&
          population[fittestIndex].getFitness() < 0.95f &&
          currentEpoch < MAX_EPOCHS) {
        crossoverCount = 0;
        mutationCount = 0;
        GASolution[] bestNextEpoch = null;
        // start below any possible average (fitness is in [0, 1]) so a branch is always kept
        float maxPopulationFitness = -1;

        // Build several candidate next generations ("branches") and keep
        // the one with the highest average fitness
        for(int n=0; n<POPULATION_BRANCHES; n++) {
            GASolution[] newPopulation = new GASolution[POPULATION_SIZE];
            float avgPopulationFitness = 0;

            for(int i=0; i<POPULATION_SIZE; i++) {
                float r = rand.nextFloat();
                if(r <= CROSSOVER_RATE) {
                    // crossover
                    newPopulation[i] = newCrossover();
                } else {
                    // carry the same individual over unchanged
                    newPopulation[i] = deepCopy(population[i]);
                }
                avgPopulationFitness += newPopulation[i].getFitness();
            }
            avgPopulationFitness /= POPULATION_SIZE;
            if(avgPopulationFitness > maxPopulationFitness) {
                bestNextEpoch = newPopulation;
                maxPopulationFitness = avgPopulationFitness;
            }
        }

        // Report progress and redraw the fittest solution every 10 epochs
        if(currentEpoch % 10 == 0) {
            System.out.printf("Epoch %d => Avg Fitness: %.2f, Crossovers: %d, Mutations: %d%n",
                    currentEpoch, maxPopulationFitness, crossoverCount/POPULATION_BRANCHES,
                    mutationCount/POPULATION_BRANCHES);
            canvas.setImage(population[fittestIndex]);
            frame.repaint();
        }
        population = bestNextEpoch;
        currentEpoch++;
    }
}
```
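The loop relies on normalizeFitnesses() and newCrossover(), neither of which is shown. A common way to use normalized fitness values is roulette-wheel (fitness-proportional) selection of parents. The Python sketch below assumes that’s roughly what newCrossover does. normalize and roulette_pick are hypothetical helpers, and normalize returns None for an all-zero population to mirror the boolean that normalizeFitnesses() appears to return.

```python
import random


def normalize(fitnesses):
    """Scale fitnesses so they sum to 1 (None if they are all zero)."""
    total = sum(fitnesses)
    if total == 0:
        return None
    return [f / total for f in fitnesses]


def roulette_pick(probabilities, rng):
    """Fitness-proportional (roulette-wheel) selection of one index."""
    spin = rng.random()
    cumulative = 0.0
    for i, p in enumerate(probabilities):
        cumulative += p
        if spin < cumulative:
            return i
    return len(probabilities) - 1  # guard against floating-point round-off


if __name__ == "__main__":
    rng = random.Random(42)
    probs = normalize([0.2, 0.6, 0.2])
    parents = [roulette_pick(probs, rng) for _ in range(2)]
    print(parents)
```

Fitter individuals get picked more often, but weaker ones still have a chance. That helps the population climb out of the ruts mentioned above.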

And the fitness function that compares generated images to the target:

```java
public float calcFitness(GASolution solution) {
    Random rand = new Random();
    BufferedImage genPicture = solution.getImage();
    float avgDistance = 0;
    // Estimate similarity from a random sample of pixels instead of every pixel
    for(int i=0; i<FITNESS_SAMPLE_SIZE; i++) {
        int x = rand.nextInt(solution.width);
        int y = rand.nextInt(solution.height);
        Color a = new Color(realPicture.getRGB(x,y));
        Color b = new Color(genPicture.getRGB(x,y));
        // Euclidean distance between the two colors in RGB space
        float distance = (float) Math.sqrt(Math.pow(a.getRed() - b.getRed(), 2) +
                                           Math.pow(a.getBlue() - b.getBlue(), 2) +
                                           Math.pow(a.getGreen() - b.getGreen(), 2));
        // dividing by MAX_DISTANCE (presumably the largest possible RGB distance)
        // keeps each term in [0, 1]
        avgDistance += (distance / MAX_DISTANCE);
    }
    avgDistance /= FITNESS_SAMPLE_SIZE;
    // 1.0 means every sampled pixel matches the target exactly
    return (1 - avgDistance);
}
```
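Here’s the same sampled fitness in Python, which is handy for sanity-checking the numbers. It assumes images stored as rows of (r, g, b) tuples and MAX_DISTANCE = sqrt(3 * 255^2) ≈ 441.7, the distance from black to white. The Java constant’s actual value isn’t shown.

```python
import math
import random

MAX_DISTANCE = math.sqrt(3 * 255 ** 2)  # assumed: distance from black to white in RGB


def calc_fitness(real, generated, sample_size, rng):
    """1 minus the mean normalized RGB distance over randomly sampled pixels.

    `real` and `generated` are same-sized images stored as rows of (r, g, b).
    """
    height, width = len(real), len(real[0])
    total = 0.0
    for _ in range(sample_size):
        x, y = rng.randrange(width), rng.randrange(height)
        # Euclidean distance between the two colors, scaled into [0, 1]
        total += math.dist(real[y][x], generated[y][x]) / MAX_DISTANCE
    return 1 - total / sample_size


if __name__ == "__main__":
    rng = random.Random(1)
    black = [[(0, 0, 0)] * 4 for _ in range(3)]
    gray = [[(128, 128, 128)] * 4 for _ in range(3)]
    print(calc_fitness(black, gray, 100, rng))
```

Identical images score 1.0, and a black image against a white one scores 0. Because pixels are drawn at random on every call, two evaluations of the same solution can differ slightly. A larger sample size trades speed for a steadier score.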

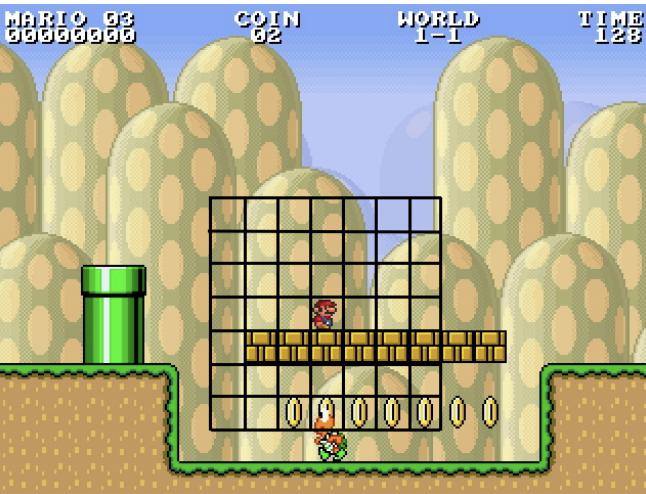

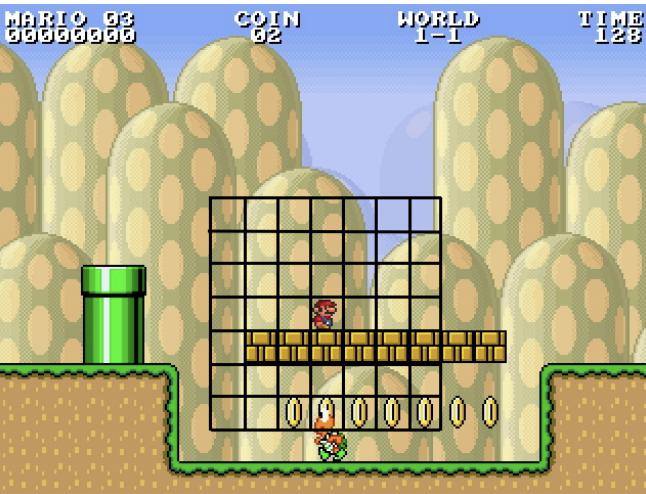

4. MarioAI: Teaching a Bot to Run, Jump, and (Sometimes) Survive

Who hasn’t wanted to build their own Mario bot? For this project, I dove into the MarioAI competition framework and tried to teach an agent to play Super Mario Bros. (or at least, not immediately fall into a pit).

Java agent controlling Mario to run, jump, and survive in the MarioAI competition framework.

- What I built: A Java agent that controls Mario, using simple logic to decide when to run, jump, and speed up. The agent tries to jump over obstacles and keep moving right, sometimes with hilarious results.

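- How it works: The bot scans the tiles around Mario for upcoming gaps, nearby enemies, and obstacles. It checks whether Mario can jump or is already in the air, then presses the direction, jump, and speed buttons accordingly. It’s not exactly AlphaGo, but it gets the job done (most of the time).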

- What I learned: Platformer AI is tricky! Timing jumps and reacting to the environment is harder than it looks. Watching the bot fail in new and creative ways was half the fun.

Side quest: If you ever want to appreciate human reflexes, watch your bot miss the same jump 20 times in a row.

Here’s the core decision-making logic:

```java
public boolean[] getAction() {
    // Look up enemy distances first so every branch below sees this frame's values
    int rightUpDistFromEnemy = closestEnemyDistance(RIGHT|UP);
    int rightDownDistFromEnemy = closestEnemyDistance(RIGHT|DOWN);

    int distFromGap = closestGapDistance();
    if(distFromGap > -1 && distFromGap <= 2 && !hasInitiatedJumpOverGap) {
        // if we detect a gap coming up soon...
        if(!isMarioOnGround) {
            // stop moving right until we hit the ground
            if(leftButtonCounter < 5) {
                action[Mario.KEY_LEFT] = true;
                leftButtonCounter++;
            } else {
                action[Mario.KEY_LEFT] = false;
            }
            action[Mario.KEY_RIGHT] = false;
            action[Mario.KEY_JUMP] = false;
        } else if(isMarioAbleToJump && (rightUpDistFromEnemy == -1 || rightUpDistFromEnemy > DIST_THRESHOLD)) {
            // once we hit the ground, jump and go right!
            leftButtonCounter = 0;
            hasJumped = false;
            hasLanded = false;
            groundCounter = 0;
            hasInitiatedJumpOverGap = true;
            action[Mario.KEY_LEFT] = false;
            action[Mario.KEY_RIGHT] = true;
            action[Mario.KEY_SPEED] = action[Mario.KEY_JUMP] = true;
        }
    } else if(hasInitiatedJumpOverGap && !hasLanded) {
        // if we're still jumping over the gap, keep jumping and moving right
        action[Mario.KEY_LEFT] = false;
        action[Mario.KEY_RIGHT] = true;
        action[Mario.KEY_SPEED] = action[Mario.KEY_JUMP] = true;
        if(!isMarioOnGround) {
            groundCounter = 0;
            hasJumped = true;
        } else if(hasJumped) {
            if(distFromGap > 0) {
                hasJumped = false;
                hasLanded = true;
                hasInitiatedJumpOverGap = false;
                groundCounter = 0;
            } else if(groundCounter < 15) {
                groundCounter++;
            } else {
                // yay! we made the jump!
                hasJumped = false;
                hasLanded = true;
                hasInitiatedJumpOverGap = false;
                groundCounter = 0;
            }
        }
    } else {
        // otherwise, if no gap: keep shooting by holding SPEED (which doubles
        // as the fire button) for 15 frames, then releasing it for 5
        if(shootCounter < 15) {
            action[Mario.KEY_SPEED] = true;
            shootCounter++;
        } else if(shootCounter < 20) {
            action[Mario.KEY_SPEED] = false;
            shootCounter++;
        } else {
            shootCounter = 0;
        }

        // see if we're next to a persistent obstacle
        if(!isEmpty(9,10) || !isEmpty(10,10) || !isEmpty(11,10)) {
            obstacleCounter++;
        } else {
            obstacleCounter = 0;
        }

        // if there are enemies on the down right...
        if(rightDownDistFromEnemy > -1 && rightDownDistFromEnemy <= DIST_THRESHOLD) {
            // ...and none on the up right, move right and jump
            if(rightUpDistFromEnemy == -1 || rightUpDistFromEnemy > DIST_THRESHOLD) {
                action[Mario.KEY_RIGHT] = true;
                action[Mario.KEY_LEFT] = false;
                action[Mario.KEY_JUMP] = isMarioAbleToJump || !isMarioOnGround;
            }
        } else {
            // move right and jump
            action[Mario.KEY_RIGHT] = true;
            action[Mario.KEY_LEFT] = false;
            action[Mario.KEY_JUMP] = isMarioAbleToJump || !isMarioOnGround;
            if(obstacleCounter > 12) {
                // jump over obstacle (as written, this repeats the line above)
                action[Mario.KEY_JUMP] = isMarioAbleToJump || !isMarioOnGround;
            }
        }
    }
    return action;
}
```
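getAction leans on closestEnemyDistance(...) with direction flags like RIGHT|DOWN, and that method isn’t shown. Here’s a hypothetical Python sketch of such a query. It assumes the enemies are given as a grid of booleans with Mario at row 9, column 9 (the gap code measures from column 9). It also assumes distance is counted in tiles as the larger of the horizontal and vertical offsets, and it returns -1 when nothing is found, to match the checks above.

```python
from enum import IntFlag


class Dir(IntFlag):
    LEFT = 1
    RIGHT = 2
    UP = 4
    DOWN = 8


MARIO_ROW, MARIO_COL = 9, 9  # assumed position of Mario in the observation grid


def closest_enemy_distance(enemies, quadrant):
    """Chebyshev distance to the nearest enemy in a quadrant, or -1 if none.

    `enemies` is a 2D grid of booleans indexed [row][col], with rows growing
    downward; `quadrant` combines one horizontal and one vertical Dir flag.
    """
    best = -1
    for row, cells in enumerate(enemies):
        for col, has_enemy in enumerate(cells):
            if not has_enemy:
                continue
            dx, dy = col - MARIO_COL, row - MARIO_ROW
            # keep only enemies on the requested side (on-axis counts for both)
            if Dir.RIGHT in quadrant and dx < 0:
                continue
            if Dir.LEFT in quadrant and dx > 0:
                continue
            if Dir.DOWN in quadrant and dy < 0:
                continue
            if Dir.UP in quadrant and dy > 0:
                continue
            dist = max(abs(dx), abs(dy))
            if best == -1 or dist < best:
                best = dist
    return best
```

Splitting the view into quadrants is what lets the agent jump over a low enemy ahead only when nothing is waiting up and to the right.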

And the gap detection logic:

```java
public int closestGapDistance() {
    // Scan rows 10-18 of the observation grid, left to right from column 10
    for(int y=10; y<19; y++) {
        int count = 0;          // consecutive bottomless empty columns so far
        int lastBrickX = -1;    // most recent solid (non-enemy) tile in this row
        int lastEmptyX = 0;     // most recent bottomless empty column
        for(int x=10; x<19; x++) {
            if(!isEmpty(y,x) && !hasEnemy(y,x)) {
                lastBrickX = x;
            } else if(isEmpty(y,x) && lastBrickX > -1) {
                // the column only counts if it is empty all the way down
                boolean allTheWayDown = true;
                for(int w=y+1; w<19; w++) {
                    if(!isEmpty(w,x)) {
                        allTheWayDown = false;
                        break;
                    }
                }
                if(allTheWayDown) {
                    if(lastEmptyX == x-1) {
                        count++;
                        if(count == 3) {
                            // three empty columns in a row: measure from the column
                            // just before them to column 9 (presumably Mario's column)
                            int pt = x-3;
                            return Math.abs(pt-9);
                        }
                    } else {
                        count = 1;
                    }
                    lastEmptyX = x;
                }
            }
        }
    }
    return -1;
}
```
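To see the gap rule in action, here’s a Python port run on a toy level. It assumes a 19x19 grid of booleans (True for terrain) and ignores enemies. It keeps the Java version’s constants: rows and columns from 10 onward, three empty columns in a row, and distances measured from column 9.

```python
MARIO_COL = 9  # assumed: the Java code measures distances from column 9


def closest_gap_distance(solid):
    """Python port of closestGapDistance() on a boolean terrain grid.

    solid[y][x] is True for terrain tiles (enemies are ignored in this sketch).
    Returns the distance from column 9 to the column just before the first
    3-wide bottomless gap found in rows 10 and below, or -1 if there is none.
    """
    rows = len(solid)
    for y in range(10, rows):
        count, last_brick_x, last_empty_x = 0, -1, 0
        for x in range(10, len(solid[y])):
            if solid[y][x]:
                last_brick_x = x
            elif last_brick_x > -1 and not any(solid[w][x] for w in range(y + 1, rows)):
                # an empty column after some terrain, with nothing below it
                count = count + 1 if last_empty_x == x - 1 else 1
                if count == 3:
                    return abs((x - 3) - MARIO_COL)
                last_empty_x = x
    return -1


if __name__ == "__main__":
    level = [[False] * 19 for _ in range(19)]
    for x in range(19):
        level[12][x] = x not in (14, 15, 16)  # ground with a 3-tile hole
    print(closest_gap_distance(level))  # 4
```

A two-tile hole never reaches count == 3, so the agent simply runs over it. Only holes three or more tiles wide trigger the careful stop-and-jump routine in getAction.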

Other Projects from this Course

Here’s a quick rundown of the other projects I tackled during those 3.5 weeks:

- Linear Regression: Implemented linear regression for data analysis tasks.

- Clustering: K-means clustering on movie ratings data.

- AISearch: Search algorithms (BFS, DFS, A*, etc.) for solving sliding puzzles and other problems.

Source Code

Reflections & Takeaways

- AI is messy: Real-world data is never as clean as textbook examples. Embrace the chaos.

- Bots are humbling: Sometimes your “smart” AI will lose to random chance. That’s life (and debugging).

- Evolution is cool: Watching solutions improve over time is weirdly addictive.

If you’re curious about the code or want to see some of the (occasionally hilarious) results, try running my code and let me know how it goes in the comments! And remember: every bug is just an undocumented feature waiting to be discovered.

No neural networks were harmed (permanently) in the making of these projects.